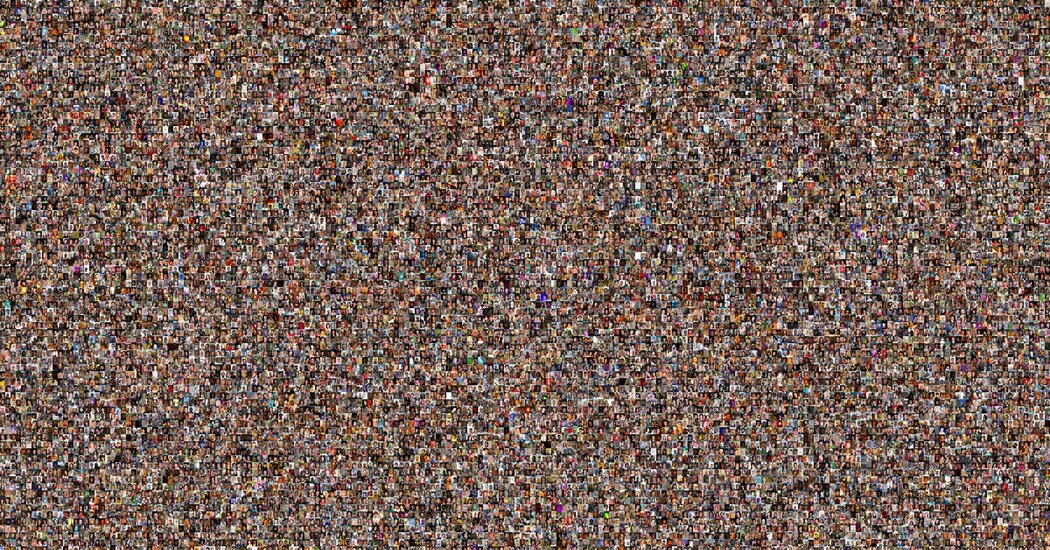

When tech companies created the facial recognition systems that are rapidly remaking government surveillance and chipping away at personal privacy, they may have received help from an unexpected source: your face.

Companies, universities and government labs have used millions of images collected from a hodgepodge of online sources to develop the technology. Now, researchers have built an online tool, Exposing.AI, that lets people search many of these image collections for their old photos.

The tool, which matches images from the Flickr online photo-sharing service, offers a window onto the vast amounts of data needed to build a wide variety of A.I. technologies, from facial recognition to online “chatbots.”

“People need to realize that some of their most intimate moments have been weaponized,” said one of its creators, Liz O’Sullivan, the technology director at the Surveillance Technology Oversight Project, a privacy and civil rights group. She helped create Exposing.AI with Adam Harvey, a researcher and artist in Berlin.

Systems using artificial intelligence do not magically become smart. They learn by pinpointing patterns in data generated by humans: photos, voice recordings, books, Wikipedia articles and all sorts of other material. The technology is getting better all the time, but it can learn human biases against women and minorities.

People may not know they are contributing to A.I. training. For some, this is a curiosity. For others, it is enormously creepy. And it can be against the law. A 2008 law in Illinois, the Biometric Information Privacy Act, imposes financial penalties if the face scans of residents are used without their consent.

In 2006, Brett Gaylor, a documentary filmmaker from Victoria, British Columbia, uploaded his honeymoon photos to Flickr, a popular service then. Nearly 15 years later, using an early version of Exposing.AI provided by Mr. Harvey, he discovered that hundreds of those photos had made their way into multiple data sets that may have been used to train facial recognition systems around the world.

Flickr, which was bought and sold by many companies over the years and is now owned by the photo-sharing service SmugMug, allowed users to share their photos under what is called a Creative Commons license. That license, common on internet sites, meant others could use the photos with certain restrictions, though these restrictions may have been ignored. In 2014, Yahoo, which owned Flickr at the time, used many of these photos in a data set meant to help with work on computer vision.

Mr. Gaylor, 43, wondered how his photos could have bounced from place to place. Then he was told that the photos may have contributed to surveillance systems in the United States and other countries, and that one of these systems was used to track China’s Uighur population.

“My curiosity turned to horror,” he said.

How honeymoon photos helped build surveillance systems in China is, in some ways, a story of unintended, or unanticipated, consequences.

Years ago, A.I. researchers at leading universities and tech companies began gathering digital photos from a wide variety of sources, including photo-sharing services, social networks, dating sites like OkCupid and even cameras installed on college quads. They shared those photos with other organizations.

That was just the norm for researchers. They all needed data to feed into their new A.I. systems, so they shared what they had. It was usually legal.

One example was MegaFace, a data set created by professors at the University of Washington in 2015. They built it without the knowledge or consent of the people whose images they folded into its enormous pool of photos. The professors posted it to the internet so others could download it.

MegaFace has been downloaded more than 6,000 times by companies and government agencies around the world, according to a New York Times public records request. They included the U.S. defense contractor Northrop Grumman; In-Q-Tel, the investment arm of the Central Intelligence Agency; ByteDance, the parent company of the Chinese social media app TikTok; and the Chinese surveillance company Megvii.

Researchers built MegaFace for use in an academic competition meant to spur the development of facial recognition systems. It was not intended for commercial use. But only a small percentage of those who downloaded MegaFace publicly participated in the competition.

“We are not in a position to discuss third-party projects,” said Victor Balta, a University of Washington spokesman. “MegaFace has been decommissioned, and MegaFace data are no longer being distributed.”

Some who downloaded the data have deployed facial recognition systems. Megvii was blacklisted last year by the Commerce Department after the Chinese government used its technology to monitor the country’s Uighur population.

The University of Washington took MegaFace offline in May, and other organizations have removed other data sets. But copies of these files could be anywhere, and they are likely to be feeding new research.

Ms. O’Sullivan and Mr. Harvey spent years trying to build a tool that could expose how all that data was being used. It was more difficult than they had anticipated.

They wanted to accept someone’s photo and, using facial recognition, instantly tell that person how many times his or her face was included in one of these data sets. But they worried that such a tool could be used in bad ways, by stalkers or by companies and nation states.

“The potential for harm seemed too great,” said Ms. O’Sullivan, who is also vice president of responsible A.I. with Arthur, a New York company that helps businesses manage the behavior of A.I. technologies.

In the end, they were forced to limit how people could search the tool and what results it delivered. The tool, as it works today, is not as effective as they would like. But the researchers worried that they could not expose the breadth of the problem without making it worse.

Exposing.AI itself does not use facial recognition. It pinpoints photos only if you already have a way of pointing to them online, with, say, an internet address. People can search only for photos that were posted to Flickr, and they need a Flickr username, tag or internet address that can identify those photos. (This provides the proper security and privacy protections, the researchers said.)
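
To make the distinction concrete, here is a minimal sketch of what an identifier-based lookup could look like. It is not Exposing.AI's actual code: the `PhotoRecord` type, the `search` function, the data set names and the sample records are all hypothetical placeholders. The point is only that the match is made on text (a username, tag or address), and no face is ever analyzed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhotoRecord:
    """One photo listed in a data set's metadata (all fields hypothetical)."""
    url: str
    username: str
    tags: frozenset
    dataset: str


# Made-up placeholder records. A real index would presumably be built from
# the metadata files that accompany each data set (an assumption).
RECORDS = [
    PhotoRecord("https://example.com/photos/alice/1", "alice",
                frozenset({"honeymoon"}), "dataset_a"),
    PhotoRecord("https://example.com/photos/alice/2", "alice",
                frozenset({"beach"}), "dataset_b"),
    PhotoRecord("https://example.com/photos/bob/7", "bob",
                frozenset({"honeymoon"}), "dataset_a"),
]


def search(query, records=RECORDS):
    """Return records whose URL, username or tag exactly equals the query.

    No image is uploaded or analyzed: matching is on text identifiers only.
    """
    q = query.strip().lower()
    return [
        r for r in records
        if q == r.url.lower() or q == r.username.lower() or q in r.tags
    ]


if __name__ == "__main__":
    for record in search("alice"):
        print(record.url, "appears in", record.dataset)
```

Because the query must already name the photo or its owner, a stranger holding only a picture of someone's face has nothing to type in, which is the trade-off the researchers describe.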

Though this limits the usefulness of the tool, it is still an eye-opener. Flickr images make up a significant swath of the facial recognition data sets that have been passed around the internet, including MegaFace.

It is not hard to find photos that people have some personal connection to. Simply by searching through old emails for Flickr links, The Times turned up photos that, according to Exposing.AI, were used in MegaFace and other facial recognition data sets.

Several belonged to Parisa Tabriz, a well-known security researcher at Google. She did not respond to a request for comment.

Mr. Gaylor is particularly disturbed by what he has discovered through the tool because he once believed that the free flow of information on the internet was mostly a positive thing. He used Flickr because it gave others the right to use his photos through the Creative Commons license.

“I am now living the consequences,” he said.

His hope, and the hope of Ms. O’Sullivan and Mr. Harvey, is that companies and government will develop new norms, policies and laws that prevent mass collection of personal data. He is making a documentary about the long, winding and occasionally disturbing path of his honeymoon photos to shine a light on the problem.

Mr. Harvey is adamant that something has to change. “We need to dismantle these as soon as possible, before they do more harm,” he said.